A few-month hiatus from this blog turned into five and a half years, but that is a much longer story. This one is about the state of desktop spherical displays in 2018. In 2011, I hacked together the Snow Globe spherical display from a laser pico-projector, an off-the-shelf fish-eye lens, a bathroom light fixture, and some shader code. I had hoped to make it easy for folks to build their own version by publishing everything, but the lens ended up being unobtanium. Judging by the comments on the post, nobody was able to properly replicate the build.

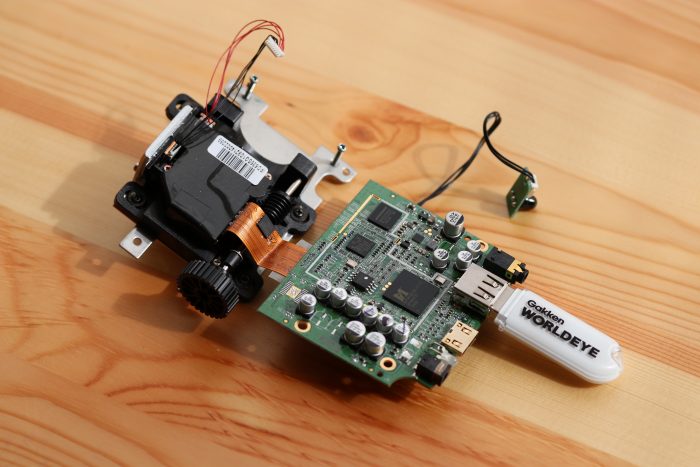

A few months ago, Palmer Luckey gave me a heads-up that a company called Gakken in Japan had a consumer version of the idea and that, like everything in the world, there were sellers on eBay and Amazon importing it into the US. The Gakken Worldeye sounded like it could fulfill the dream of a desktop spherical display, so I bought one to use and another to tear down. It ended up being a hemispherical display with a pretty decent projection surface but a terrible projector and even worse driving electronics. The guts of the sphere are above. There is a VGA-resolution TI DLP that is cropped by the lens to a 480-pixel circle. The Worldeye takes 720p input over HDMI, which is then downsampled and squashed horizontally to that circle by an MStar video bridge. Between the poor projector resolution and the questionable resampling, the results look extremely blurry.

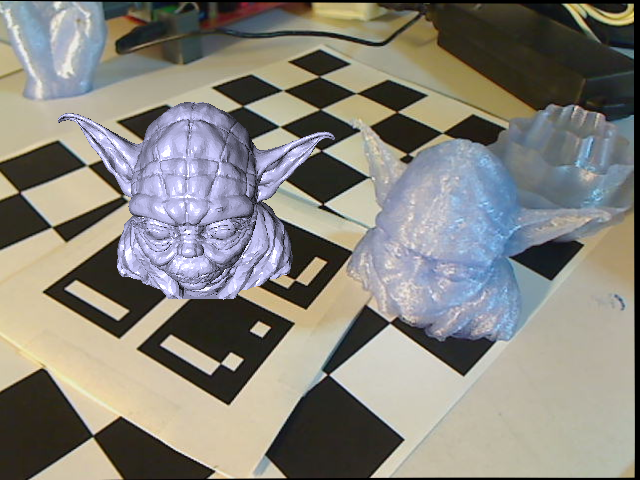

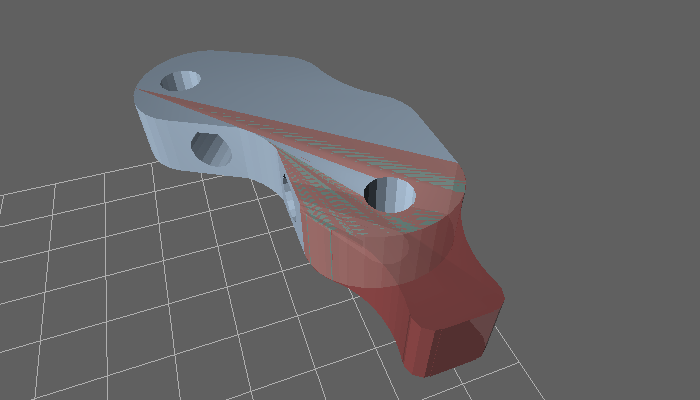

I figured it would be possible to improve on the sphere by keeping the display surface and lens and swapping out the projector and electronics. In the time since the ShowWX used in the Snow Globe was released, Microvision has developed higher-resolution laser-scanning projector modules in conjunction with Sony and others. I picked up a Sony MP-CL1 with one of these modules, which is natively 1280×720. This should have been a decent improvement over the 848×480 in the ShowWX. I then CAD’d up and 3D-printed a holder to mount it along with the original Worldeye lens into the globe.

The results are a bit underwhelming. The image looks better than the stock Worldeye, but still looks quite blurry. I realized afterwards that the sphere diameter is too small to take advantage of the projector resolution. At around a 5″ radius, the surface of the sphere is getting around 1.8 pixels per mm (assuming uniform distortion), which works out to a pixel pitch of roughly 0.55 mm. The laser beam coming out of the projector is well over 1 mm wide, and probably closer to 1.5 mm. This means that each beam spot covers roughly two to three pixels, so neighboring pixels are blending heavily into each other. The lens MTF is probably also pretty poor, which doesn’t help the sharpness issue. If you’re interested in trying this out anyway, the .scad and .stl files are up on Thingiverse and the code for the Science on a Snow Globe application to display equirectangular images and videos is on GitHub. To answer the question from the opening: spherical displays are more accessible in 2018 than they were in 2011, but don’t seem to be any better quality. Hopefully someone takes the initiative to solve this.
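For anyone who wants to redo the pixel density estimate above for a different sphere or projector, here is a minimal sketch in Python. It rests on a few assumptions that are mine rather than measured: the screen is treated as a full hemisphere, the 720-pixel image circle is taken to span the dome from rim to rim over the pole, and pixels are spread evenly along that arc (the "uniform distortion" case). The function names are made up for illustration.

```python
import math

MM_PER_INCH = 25.4


def pixels_per_mm(radius_in: float, image_circle_px: int) -> float:
    """Linear pixel density along a meridian of a hemispherical screen.

    Assumes the image circle spans the dome rim to rim over the pole and
    that pixels are spread evenly along that arc (uniform distortion).
    """
    arc_mm = math.pi * radius_in * MM_PER_INCH  # half a great circle
    return image_circle_px / arc_mm


def pixels_per_beam(beam_mm: float, density_px_per_mm: float) -> float:
    """How many pixel pitches a laser spot of the given width covers."""
    return beam_mm * density_px_per_mm


density = pixels_per_mm(radius_in=5.0, image_circle_px=720)
print(f"density: {density:.2f} px/mm, pitch: {1 / density:.2f} mm")
for beam_mm in (1.0, 1.5):  # beam widths estimated in the post
    print(f"{beam_mm} mm beam covers {pixels_per_beam(beam_mm, density):.1f} px")
```

Under those assumptions the 5″ radius comes out at about 1.8 pixels per mm, and a 1 to 1.5 mm beam smears across roughly two to three pixels. Plugging in a larger radius shows how big the sphere would need to be before the pixel pitch catches up with the beam width.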