I was chatting with a new hardware entrepreneur recently who asked an excellent question: What does “Hardware is hard” really mean? The commonly cited reasons are that it is time consuming and expensive to iterate on, that you can’t ship patches, that it requires specialized skill sets, and that the pool of investors is smaller. These all relate, but at its core, what makes hardware hard is that you don’t get second chances.

Pick the wrong target audience, go-to-market strategy, feature set, pricing, production forecast, manufacturing methodology, manufacturing partners, or logistics partners, and you will run out of money. Worse, you can get all but one of those things right and still never make it off the ground. Startups require setting a bold hypothesis and then charting a narrow course to proving it. In many software and service businesses, you can set short milestones to test and iterate with target audiences closed-loop, course correcting rapidly to find product-market fit with a small team before scaling. If you have a strong team and you’re picking good hypotheses, you’ll have a reasonable chance to get enough funding to keep going at it for some time.

With hardware, each of those is orders of magnitude more challenging. Iteration cycles are expensive, partner and technology selections can take you through one-way doors you can’t afford to walk back from, and building inventory of the wrong product or for the wrong audience is fatal. Perhaps most critically, if you run out of money, you are unlikely to get funding to pivot, no matter how strong your team is.

All of that said, hardware is essential to civilization and a whole lot of fun to make. Nobody needs another Web3 NFT marketplace. Build something that matters. Here’s what you can do to minimize your likelihood of death along the way:

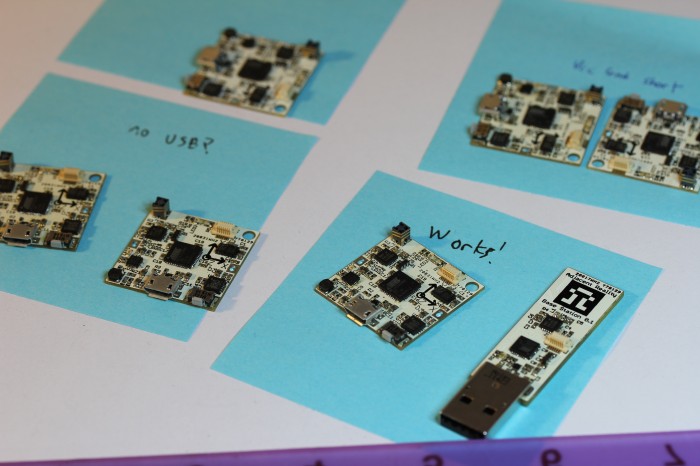

- Be extremely deliberate about your audience sequencing. Think about the first 1k, the first 10k, the first 100k, and the first 1M customers. Don’t start your company with a product or go-to-market strategy for the first 1M customers unless you are certain that it will also satisfy the first 1k. It takes brand awareness, company credibility, and ultimately the right product to fit your audiences’ needs to reach each subsequent level of scale. There are ways to streamline this that will depend on your product and category. For example, starting with an openly available development kit works well for products that will eventually have a hardware or software ecosystem attached to them (something we did successfully at Oculus).

- Deeply understand how your audiences behave today, what they are buying, and why what you are building would be enough of an improvement to make them change their behavior. Think through the purchase cycle. Does this need to be a replacement, a first product, a second product, or a toy to tinker with? I don’t generally subscribe to product/management fads, but Jobs-to-be-done has a useful framing for audience psychology with the “four forces” diagram.

- Build the right team and pick the right partners. Many hardware startups try to emulate Apple’s product and design philosophy and then replicate the staffing model that requires. Don’t. Start with the smallest possible team for what you need to differentiate on in design and engineering, along with the core set of people needed to safely steer supply chain and logistics. Leverage partners for everything else. You can always in-house more in the future as you scale, but don’t make the mistake of building substantial capital infrastructure or hiring team members that you don’t need in order to reach product-market fit. You can solve this with a competent manufacturing partner that has expertise or at least transferable skills and infrastructure for your space. You can work with them in a “Joint Development Model”. The people you’ll work with there will bring an enormous amount of collective experience and team cohesion, won’t need equity, can move fast, and will generally require less OpEx than hiring the equivalent capability in house.

- Think carefully about funding and runway. Unless you can afford to self-fund your company to profitability, make sure you’re actively having discussions with potential investors as you plan, whether angels or institutional VCs. Lay out your hypotheses around product roadmap and audience scaling and the funding milestones that go with them. Critically, make sure plausible investors agree that those are sensible milestones. Otherwise, you’ll end up at one without the cash to go further.

- Find ways to get the first 1k or 10k customers to help fund the business. Never use equity to fund inventory. Think carefully about how you can generate enough credibility to get 1k or 10k people to put money down on a product that hasn’t been manufactured yet. That is most often in the form of pre-orders. Credibility generation may be through seeding development units to influential users or press in the space, through a limited developer kit program, through a community beta program, or other creative paths. If you can’t get 1k customers to take the bet and spend money on your product, investors probably shouldn’t place a bet on you either.

This is by no means a comprehensive list of tactics, but a condensed set of viewpoints around a topic that could fill a book. Note that all of the numbers above assume a consumer hardware product in a large category. Feel free to scale the numbers appropriately for enterprise/commercial/niche hardware. If you’re starting a hardware startup, especially in consumer, and you’d like feedback on how you’re approaching it, feel free to send me an email. I applied each of the above to Framework, and would be happy to help check for startup-advice-fit on other companies.