When looking into small form factor cases to build a Mini-ITX PC for my Rift, I found a few things:

- Like any other hobby, there is an obsessive (in a good way) community of small form factor enthusiasts.

- The metric they optimize for is case size in liters.

- Builders often end up compromising: either restricting their component choices to fit the case they want, or choosing a case around the components they already have.

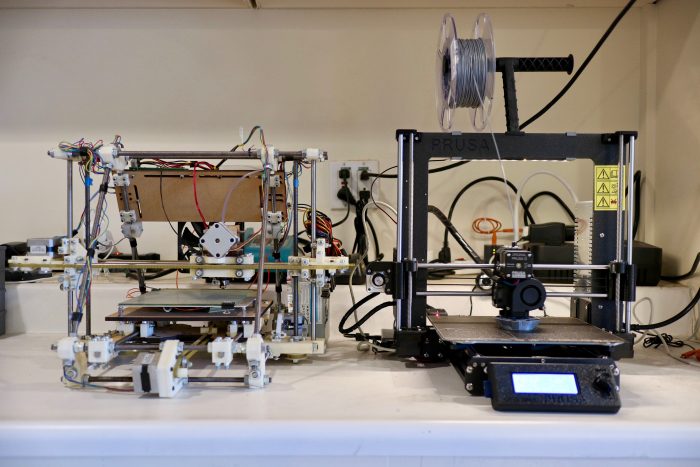

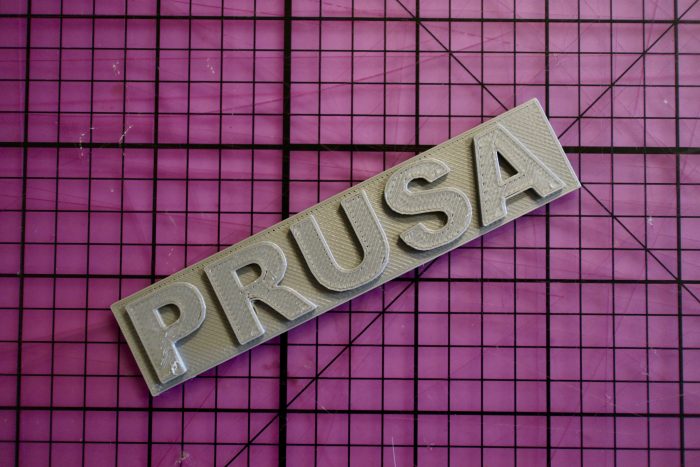

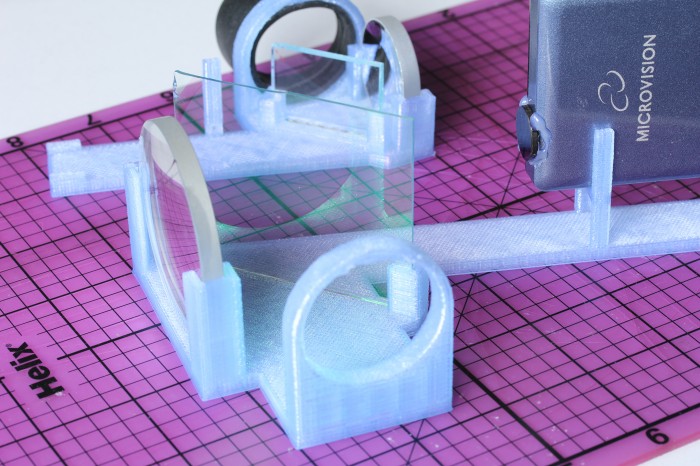

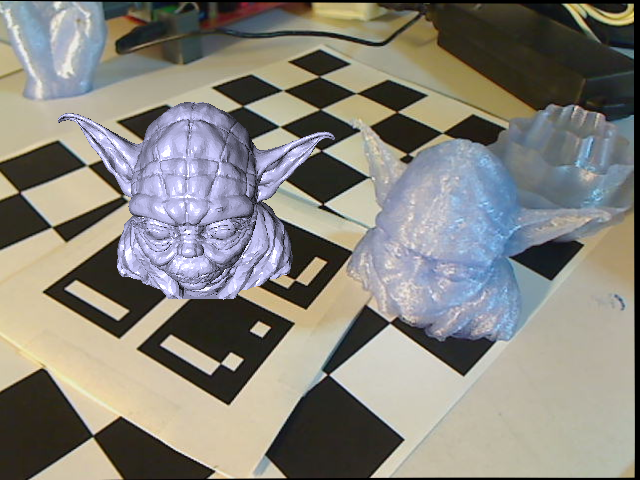

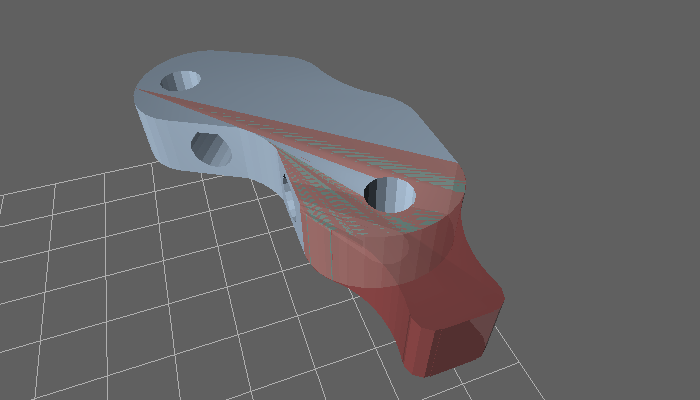

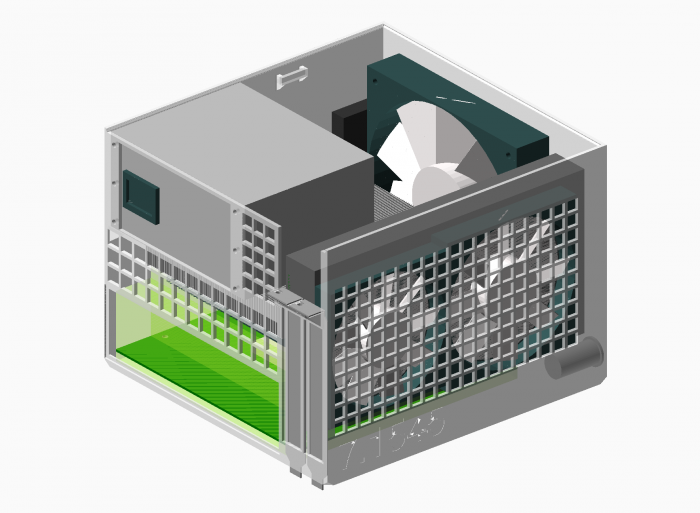

Rather than limiting choice or ending up with a larger-than-desired case, why not make your case exactly match the size of the components you want, with no wasted space? It turns out that a Mini-ITX motherboard, SFX power supply, and short GPU just barely fit within the build volume of a Prusa i3 MK3 3D printer, so I decided to solve exactly that problem with an open-source, fully parametric, printable case in OpenSCAD. That means you can input the components you have, or edit a few dimensions, and output a bespoke case that fits them perfectly. To win community brownie points, the volume of the case is also automatically calculated and embossed on the side.
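To give a feel for how a volume label like that can be generated, here is a minimal OpenSCAD sketch. It is not the project's actual code: `case_size`, `volume_liters`, and `round_to` are hypothetical names, the dimensions are made up, and the label is recessed into a plain box rather than a real case body.

```openscad
// Hypothetical outer dimensions in millimetres (not taken from the project).
case_size = [200, 150, 100];

// Volume of a box in litres: 1 L = 1,000,000 mm^3.
function volume_liters(size) = size[0] * size[1] * size[2] / 1000000;

// Round x to the given number of decimal places for a tidy label.
function round_to(x, places) = round(x * pow(10, places)) / pow(10, places);

label = str(round_to(volume_liters(case_size), 1), " L");
echo(label);

difference() {
    cube(case_size);
    // Recess the label 1 mm into the front (y = 0) face.
    // The text is drawn flat, stood upright with rotate(), then pushed
    // 1 mm into the box so the 2 mm extrusion straddles the surface.
    translate([case_size[0] / 2, 1, case_size[2] / 2])
        rotate([90, 0, 0])
            linear_extrude(height = 2)
                text(label, size = 12, halign = "center", valign = "center");
}
```

Because the label is derived from the same vector that sizes the box, changing any dimension updates the printed figure automatically. For a raised label, the text could be joined with `union()` instead of subtracted.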

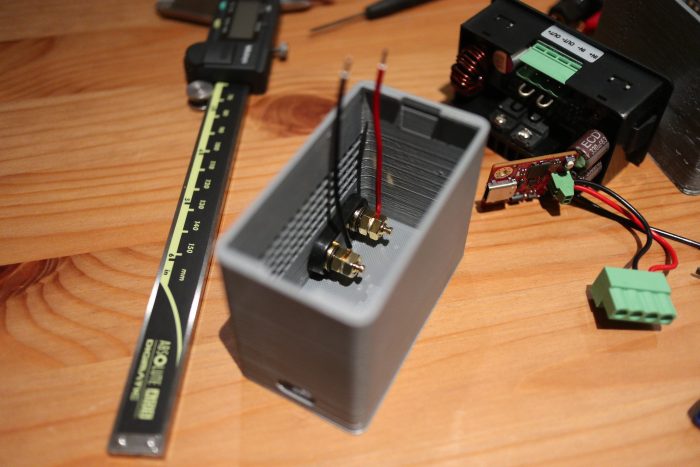

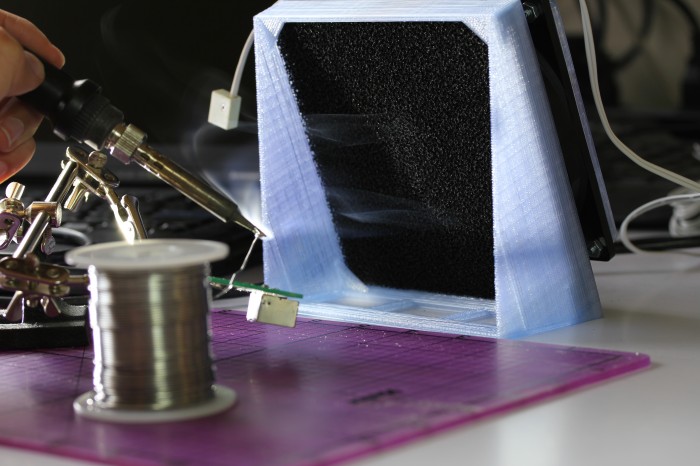

Partly for rigidity and partly for simplicity of design and assembly, I decided to make it effectively a bucket with most of the case being a single print. I started with a traditional “shoebox” layout to keep it simple as well. The only other parts are the lid and optional feet (printable in flexible material like TPU). I also used threaded inserts rather than screwing into plastic to allow re-assembly without destroying the case.
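As a rough illustration of the bucket idea, the sketch below builds an open-topped shell with corner bosses that have pilot holes for threaded inserts. All names and numbers here are assumptions for illustration (including the 4 mm hole for an M3 heat-set insert, which should be checked against your insert's datasheet); the real case has far more detail.

```openscad
// Hypothetical values for illustration only.
inner = [180, 130, 90];   // interior space in mm
wall = 2;                 // wall and floor thickness in mm
boss = 8;                 // side length of the square corner bosses in mm
insert_hole_d = 4;        // pilot hole diameter for an M3 insert (assumed)
insert_hole_depth = 6;    // pilot hole depth in mm (assumed)

// Outer size: walls on four sides and a floor, but no top.
function outer_size(inner, wall) =
    [inner[0] + 2 * wall, inner[1] + 2 * wall, inner[2] + wall];

module bucket() {
    outer = outer_size(inner, wall);
    difference() {
        union() {
            // Hollow shell: solid block minus the interior, cut through the top.
            difference() {
                cube(outer);
                translate([wall, wall, wall])
                    cube([inner[0], inner[1], inner[2] + 1]);
            }
            // Full-height bosses in each corner for the lid screws.
            for (x = [wall, outer[0] - wall - boss],
                 y = [wall, outer[1] - wall - boss])
                translate([x, y, wall]) cube([boss, boss, inner[2]]);
        }
        // Pilot holes drilled down from the top of each boss.
        for (x = [wall + boss / 2, outer[0] - wall - boss / 2],
             y = [wall + boss / 2, outer[1] - wall - boss / 2])
            translate([x, y, outer[2] - insert_hole_depth])
                cylinder(d = insert_hole_d, h = insert_hole_depth + 1, $fn = 32);
    }
}

bucket();
```

Keeping the walls, floor, and bosses in one solid is what makes the body a single print; the lid then only needs matching clearance holes.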

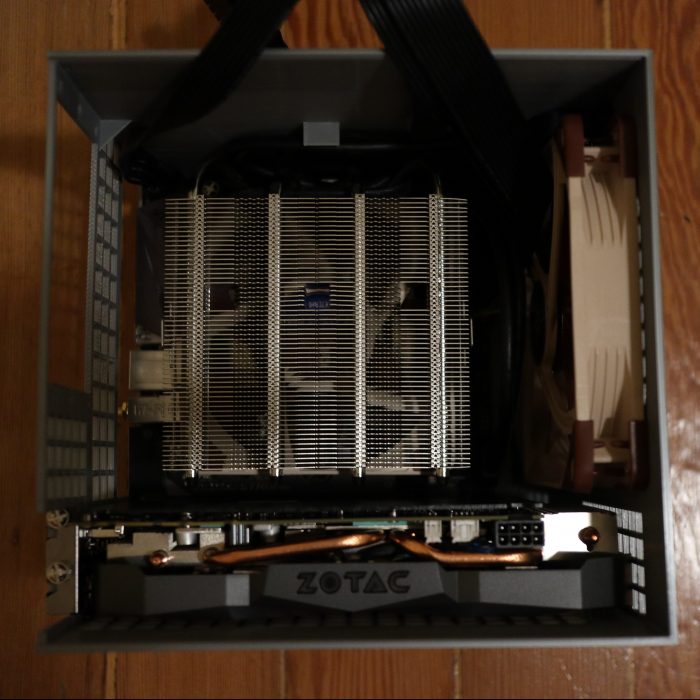

I referenced the Mini-ITX and PCIe specs for the proper dimensions, measured the components I had on hand, and pulled datasheets online for specifics on the heatsinks and the GPU. Ventilation is good all around: the default configuration, which fits my components, has a 140mm intake fan and a mostly isolated GPU with dedicated intake and exhaust.

It took me three or four iterations of prints (~36 hours and ~400g/$8 of plastic each) to get to a level of completeness that I’m happy to use and publish, but there is certainly more to improve. Since it is open source, revisions and fixes are welcome.

I tried to make it as simple as possible to customize by providing keyword fields for the power supply type and the heatsink. The PSU can be SFX, SFX-L, or FlexATX, and the heatsink can be a 120mm AIO, Noctua NH-L12S, Noctua NH-U9S, or Cryorig C7. If you have any of those and the same GPU I have (Zotac 1080 Mini), you can just edit the keywords and the case will be automatically generated to fit them. If you want to make deeper changes or use different components, you can do so by editing the .scad files.

    traditional(show_body = true, show_lid = false, show_internals = false, heatsink_type = "noctua_nh_l12s", psu_type = "sfx");
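Keyword fields like these can be backed by a simple lookup from keyword to dimensions. The following is only a sketch of that pattern, not the project's implementation: `psu_size` and `psu_placeholder` are hypothetical names, `"sfx"` is the only keyword confirmed by the call above (`"sfx_l"` and `"flexatx"` are guesses), and the sizes are nominal form-factor figures that should be checked against your PSU's datasheet.

```openscad
// Nominal [width, depth, height] in mm for each PSU keyword.
// Only "sfx" appears in the original call; the other keywords are assumed.
function psu_size(psu_type) =
    psu_type == "sfx"     ? [125, 100, 63.5] :
    psu_type == "sfx_l"   ? [125, 130, 63.5] :
    psu_type == "flexatx" ? [81.5, 150, 40.5] :
    assert(false, str("Unknown psu_type: ", psu_type)) undef;

// Stand-in block showing the space the chosen PSU would occupy.
module psu_placeholder(psu_type = "sfx") {
    color("gray") cube(psu_size(psu_type));
}

psu_placeholder("sfx");
```

With a lookup like this, the rest of the model can size cutouts and walls from `psu_size(psu_type)`, so switching keywords resizes everything that depends on it. The `assert` makes a mistyped keyword fail loudly instead of silently producing a malformed case.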

The full CAD, example ready-to-print .stl files, and instructions are up on GitHub under the open-source 2-Clause BSD license. You can also follow the development thread on SFF Forum.