Math. It turns out it’s not quite like riding a bike. A year out of college, and two since my last computer vision course, my knowledge of linear algebra is basically nil. Several projects I’m stewing on are bottlenecked on this, so I decided to relearn some basics and build a tool I’ve wanted for a while: a way to quickly and easily calculate the homography between a camera and a projector, that is, a transformation that maps points from the camera plane to the display plane. This opens up a world of possibilities, like virtual whiteboards and interactive displays.

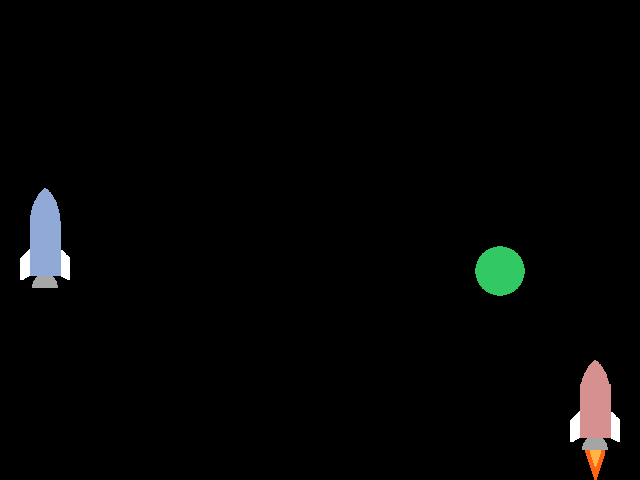

I won’t go into detail about deriving the transformation matrix, since others have explained it better than I could. The calculation requires four or more matching point pairs between the two planes. Finding the points manually is a pain, so I wrote a script that uses Pygame and NumPy to do it interactively. The script works as follows:

- Point an infrared camera at a projector screen, with both connected to the same computer.

- Run the script.

- Align a lit IR LED to the green X on the projector screen, and press any key.

- Repeat the previous step until you have four points (or more, depending on the script mode).

- The script then calculates the homography, prints it out, and saves it as a NumPy file.
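The calculation in that last step can be sketched with NumPy alone. This is a minimal version of the standard direct linear transform, which may or may not match what homography.py does internally; the function name `find_homography` is made up for illustration, and the sample points are the ones from the terminal session further down.

```python
import numpy


def find_homography(src_points, dst_points):
    """Estimate the 3x3 homography that maps src_points onto dst_points.

    Hypothetical helper: a plain direct linear transform, assuming four
    or more matching (x, y) pairs with no three points on one line.
    """
    if len(src_points) != len(dst_points) or len(src_points) < 4:
        raise ValueError("need four or more matching point pairs")

    rows = []
    for (x, y), (u, v) in zip(src_points, dst_points):
        # Each pair contributes two linear equations in the nine
        # unknown entries of the matrix.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    a = numpy.array(rows, dtype=float)

    # The solution is the right singular vector belonging to the
    # smallest singular value, which is the last row of vt.
    _, _, vt = numpy.linalg.svd(a)
    h = vt[-1].reshape(3, 3)

    # Scale so the bottom-right entry is 1 (assumes it is not zero).
    return h / h[2, 2]


# Camera points and the display points they were matched to.
camera = [(18, 143), (560, 137), (32, 446), (559, 430)]
display = [(0, 0), (1920, 0), (0, 1080), (1920, 1080)]
print(find_homography(camera, display))
```

With more than four pairs the same code returns a least-squares fit in the algebraic sense, which is one reason to collect extra points when the LED is hard to position exactly.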

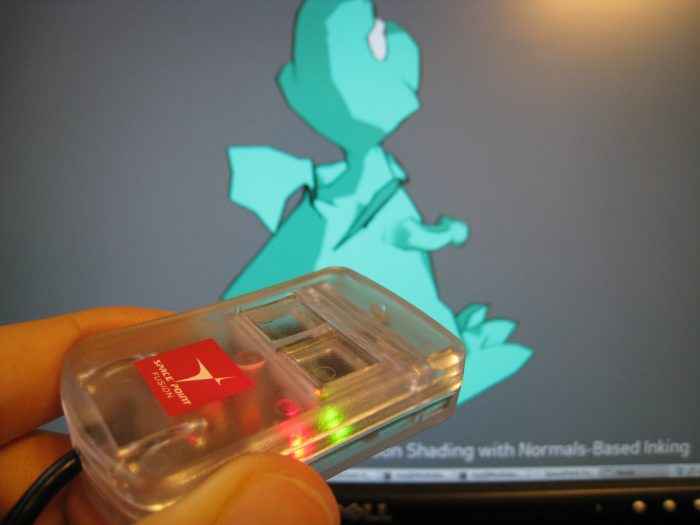

The script in its current form works with any Pygame-supported infrared camera. Today that likely means a modded PS3 Eye or other webcam, unless you’re lucky enough to have a Point Grey IR camera. I do not, so I hot glued an IR filter from eBay to the front of my Eye, which I may have forgotten to return to CMU’s ECE Department when I graduated. Floppy disk material and exposed film can also function as IR filters on the cheap. Just be sure to pop the IR-blocking filter out of the camera first.

It would be overly optimistic of me to believe there are many people in the world with both the hardware and the desire to run this script. Luckily, thanks to the magic of open source software and the modularity of Python, individual classes and methods from the file may be useful on their own. It should also be relatively straightforward to modify the script to accept other types of input, like a regular webcam with color tracking or a Wii Remote. I will add the latter myself if I can find a reasonable Python library to interface with one.

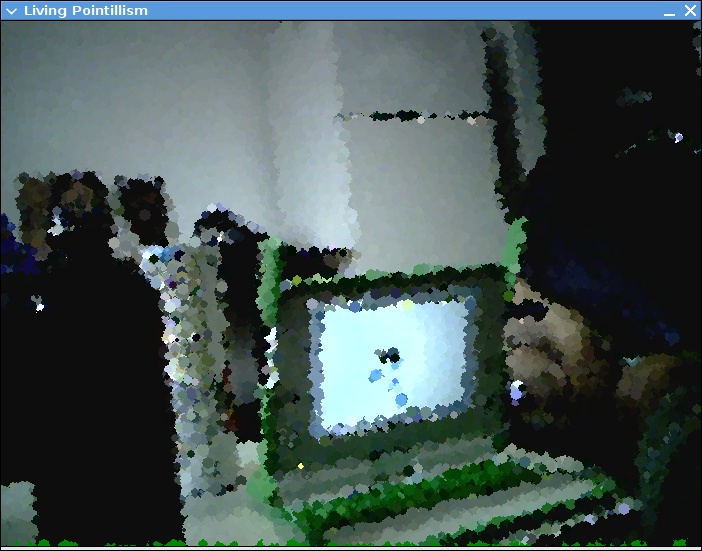

Once you have the transformation matrix, you can take the dot product of the matrix and the camera point (in homogeneous coordinates), then divide by the third component, to get the corresponding point on the projector. The blackboard script, demonstrated by my roommate above and downloadable below, shows a use case for this: a drawing app that uses an IR LED as a sort of spray can. The meat of it, converting a point from camera to projector coordinates, is basically:

# use homogeneous coordinates

p = numpy.array([point[0],point[1],1])

# convert the point from camera to display coordinates

p = numpy.dot(matrix,p)

# normalize it

point = (p[0]/p[2], p[1]/p[2])
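If you have a whole batch of camera points to convert, the same three steps can be applied to all of them at once. The following is only a sketch; `camera_to_display` is a name I made up here, not something taken from blackboard.py, and the example matrix is a toy scale-and-shift rather than a real calibration.

```python
import numpy


def camera_to_display(matrix, points):
    """Map one or more (x, y) camera points into display coordinates.

    Hypothetical helper: `matrix` is a 3x3 homography and `points` is a
    single (x, y) pair or a sequence of them. Returns an N x 2 array.
    """
    pts = numpy.asarray(points, dtype=float).reshape(-1, 2)

    # Use homogeneous coordinates: append a 1 to every point.
    ones = numpy.ones((pts.shape[0], 1))
    homogeneous = numpy.hstack([pts, ones])

    # Convert from camera to display coordinates. Multiplying the row
    # vectors by the transpose is the same as matrix . p for each point.
    mapped = numpy.dot(homogeneous, numpy.asarray(matrix, dtype=float).T)

    # Normalize by dividing x and y by the third component.
    return mapped[:, :2] / mapped[:, 2:3]


# Toy matrix: double both coordinates, then shift by (10, 20).
toy = numpy.array([[2.0, 0.0, 10.0],
                   [0.0, 2.0, 20.0],
                   [0.0, 0.0, 1.0]])
print(camera_to_display(toy, [(0, 0), (5, 5)]))
```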

The homography.py script takes the filename of the matrix file to write as its only argument. It also has an option, “-l”, that lets you use four or more points randomly placed around the screen, rather than the four corner points. This could come in handy if your camera doesn’t cover the projector’s entire field of view. You can hit the right arrow key to skip points you can’t reach, and Escape to finish and calculate. The blackboard.py script takes the name of the file the homography script spits out as its only argument. Both require Pygame 1.9 or newer, as it contains the camera module. Both are also licensed for use under the ISC license, which is quite permissive.

nrp@Mediabox:~/Desktop$ python homography.py blah

First display point (0, 0)

Point from source (18, 143). Need more points? True

New display point (1920, 0)

Point from source (560, 137). Need more points? True

New display point (0, 1080)

Point from source (32, 446). Need more points? True

New display point (1920, 1080)

Point from source (559, 430). Need more points? False

Saving matrix to blah.npy

array([[ 3.37199729e+00, -1.55801855e-01, -3.84162860e+01],

[ 3.78207304e-02, 3.41647264e+00, -4.89236361e+02],

[ -6.36755677e-05, -8.73581448e-05, 1.00000000e+00]])

nrp@Mediabox:~/Desktop$ python blackboard.py blah.npy
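As a sanity check on the session above, you can push the four camera points back through the printed matrix and confirm they land on the display corners. The sketch below also round-trips the matrix through a file, on the assumption that the script saves with `numpy.save`; that is my guess from the .npy extension, and it would explain why the argument “blah” becomes “blah.npy”, since `numpy.save` appends the extension when it is missing. The helper name `map_point` is made up for this example.

```python
import os
import tempfile

import numpy

# The matrix exactly as printed in the session above.
matrix = numpy.array([
    [3.37199729e+00, -1.55801855e-01, -3.84162860e+01],
    [3.78207304e-02, 3.41647264e+00, -4.89236361e+02],
    [-6.36755677e-05, -8.73581448e-05, 1.00000000e+00],
])

# (camera point, display point) pairs from the same session.
pairs = [
    ((18, 143), (0, 0)),
    ((560, 137), (1920, 0)),
    ((32, 446), (0, 1080)),
    ((559, 430), (1920, 1080)),
]


def map_point(matrix, point):
    """Hypothetical helper: map one camera point to display coordinates."""
    p = numpy.dot(matrix, numpy.array([point[0], point[1], 1.0]))
    return (float(p[0] / p[2]), float(p[1] / p[2]))


with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "blah")
    numpy.save(path, matrix)            # written as "blah.npy"
    loaded = numpy.load(path + ".npy")

for source, display in pairs:
    x, y = map_point(loaded, source)
    print("%s -> (%.1f, %.1f), expected %s" % (source, x, y, display))
```

Each mapped point should come out within a small fraction of a pixel of its display corner. If it does not, the matrix was probably built with the point pairs in the wrong order.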

Download:

homography.py

blackboard.py