I have probably stated in the past that I don’t do 3d. As of a few months ago, this is no longer accurate. Between Deep Sheep, a computer graphics course, and a computer animation course, I have grown an additional dimension. This dimension is now bearing dimensional fruit.

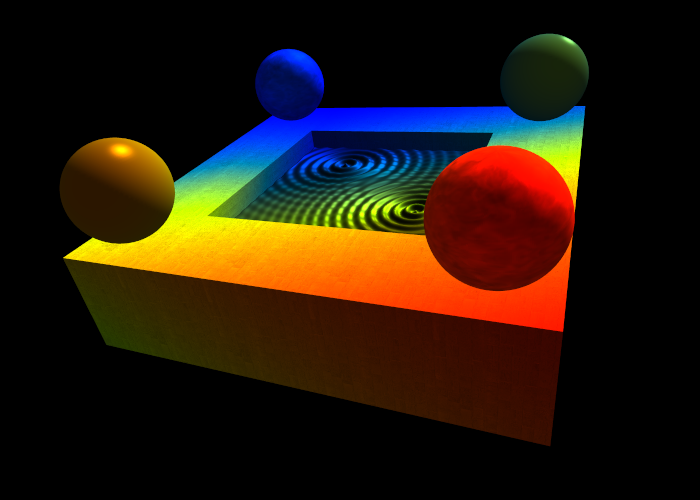

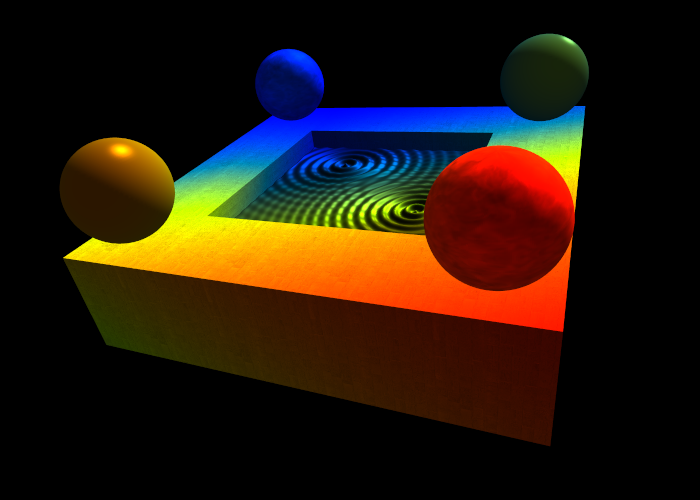

After watching Coraline in 3d over spring break, I became obsessed with the possibilities of 3d. Of course, as a student, I can’t quite afford fancy glasses or a polarized projector. My budget is a bit closer to the free red/blue glasses one might find in a particularly excellent box of cereal. Searching around the internet, and eBay specifically, I came across a seemingly voodoo-like 3d technology I’d never heard of: cardboard glasses with clear-looking plastic sheet lenses that could turn hand-drawn images 3d. This technology is ChromaDepth, which makes red objects appear to float in the air, while blue objects seem to recede into the background. Essentially, it uses prisms to offset red and blue light by different amounts for each eye, and your brain reads that color-dependent offset as depth. So, creating a ChromaDepth image is just a matter of coloring objects according to their distance, which is something computers are great at!

Of course, I am far from the first person to apply a computer to this. Mike Bailey developed a cool solution in OpenGL a decade ago which applies an HSV color strip texture to each object, indexed by its depth in the image. The downside there is that objects can no longer have actual textures. Textures are pretty tricky with ChromaDepth, in that changing the color of an object will throw off its apparent depth.
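To make the depth-to-color idea concrete, here is a minimal sketch in Python (not Bailey's code, and not OpenGL). It assumes a linear mapping from distance to hue, with red at a `near` distance and blue at a `far` distance; the function name `depth_to_rgb` and both parameters are made up for illustration.

```python
import colorsys

def depth_to_rgb(depth, near, far):
    """Map a distance from the camera to a fully saturated RGB color.

    Assumption: hue varies linearly with distance, from red at `near`
    to blue at `far`. Anything outside that range is clamped.
    """
    # Normalize the distance to [0, 1] and clamp it.
    t = (depth - near) / (far - near)
    t = min(max(t, 0.0), 1.0)
    # In HSV, hue 0 is red and hue 2/3 is blue; green sits in between.
    hue = t * (2.0 / 3.0)
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)

if __name__ == "__main__":
    for d in (1.0, 5.5, 10.0):
        print(d, depth_to_rgb(d, near=1.0, far=10.0))
```

Because every pixel ends up fully saturated and fully bright, there is no room left for a texture or shading, which is exactly the limitation described above.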

I wrote fragment and vertex shaders in GLSL that resolve this problem. The hue of the resulting fragments depends only on the distance from the camera in the scene, with closer objects appearing redder, continuing through the spectrum to blue objects in the distance. The texture, diffuse lighting, specular lighting and material properties of the object set the brightness of the color, giving the illusion of shading and texture while remaining ChromaDepth safe. My code is available below. Sticking with the theme of picking a different license for each work, this is released under the WTFPL. I can’t release the source of the OpenGL end of it, as it is from a school project. It should be fairly simple to drop into anything though. You will need ChromaDepth glasses to see the effect, which you can get on eBay or elsewhere for under $3 each.
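The per-fragment logic can be sketched outside of GLSL too. The Python below is not the shader itself, just a rough illustration of the idea: hue comes from distance alone, and the already-lit, already-textured color is collapsed to a single brightness value. The name `chromadepth_color`, the `near`/`far` range, the linear hue ramp, and the Rec. 601 luma weights are all assumptions of this sketch; the actual shaders may combine these differently.

```python
import colorsys

def chromadepth_color(depth, near, far, shaded_rgb):
    """Combine a depth-derived hue with the brightness of a shaded color.

    `shaded_rgb` stands in for what ordinary texturing and lighting
    would have produced for this fragment (components in [0, 1]).
    """
    # Hue depends only on distance: red at `near`, blue at `far`.
    t = (depth - near) / (far - near)
    t = min(max(t, 0.0), 1.0)
    hue = t * (2.0 / 3.0)
    # Collapse the shaded color to one brightness value.
    # Assumption: Rec. 601 luma weights; any grayscale conversion would do.
    r, g, b = shaded_rgb
    brightness = 0.299 * r + 0.587 * g + 0.114 * b
    brightness = min(max(brightness, 0.0), 1.0)
    # Full saturation keeps the hue, and so the perceived depth, unambiguous.
    return colorsys.hsv_to_rgb(hue, 1.0, brightness)

if __name__ == "__main__":
    # Same depth, different shading: the hue stays put, only brightness moves.
    print(chromadepth_color(5.5, 1.0, 10.0, (1.0, 1.0, 1.0)))
    print(chromadepth_color(5.5, 1.0, 10.0, (0.5, 0.5, 0.5)))
```

The point of splitting things this way is that a dark patch of texture and a bright highlight at the same distance land on the same hue, so the glasses place them at the same depth.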

Download:

ChromaDepth GLSL Vertex Shader

ChromaDepth GLSL Fragment Shader