After getting my Lytro camera yesterday, I set about answering the questions about the light field capture format I had from the last time around. Lytro may be focusing (pun absolutely intended) on the Facebook using crowd with their camera and software, but their file format suggests they don’t mind nerds like us poking around. The file structure is the same as what they use for their compressed web display .lfp files, complete with a plain text table of contents, so I was able to re-use the lfpsplitter tool I wrote earlier with some minor modifications. The README with the tool describes in detail the format of the file and how to parse it.

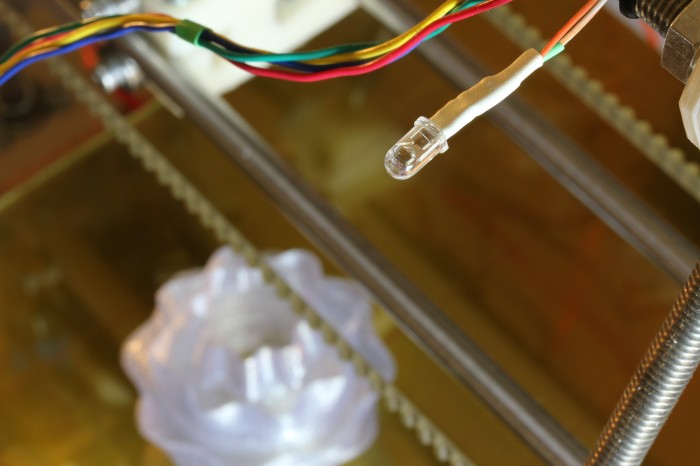

The table of contents in the raw .lfp files gives away most of the camera’s secrets. It contains a bunch of useful metadata and calibration data like the focal length, sensor temperature, exposure length, and zoom length. It also gives away the fact that the camera contains a 3 axis accelerometer, storing the orientation of the camera with respect to gravity in each image. The physical sensor is 3280 by 3280 pixels, and the raw file just contains a BGGR Bayer array of it at 12 bits per pixel. Saving the array and converting it to tif using the raw2tiff command below shows that each microlens is about 10 pixels in diameter with some vignetting on the edges.

raw2tiff -w 3280 -l 3280 -d short IMG_0004_imageRef0.raw output.tif |

Syncing the camera to Lytro’s desktop software backs it up the first time. Amazingly, the backup file uses the same structure as both .lfp file types. The file contains a huge amount of factory calibration data like an array of hot or stuck pixels and color calibration under different lighting conditions. Incredibly, it also lets loose that there is functioning Wi-Fi on board the camera with files named “C:\\CALIB\\WIFI_PING_RESULT.TXT” and “C:\\CALIB\\WIFI_MAC_ADDR.TXT”, which matches what the FCC teardowns show. There is no mention of Bluetooth support though, despite support by the chipset. In any case, it seems there is a lot of cool stuff coming via firmware updates.

Hopefully one of those updates enables a USB Mass Storage mode, as there does not appear to be any way to get files off of the camera in Linux. I had to borrow my roommate’s MacBook Air for this escapade. The camera shows up as a SCSI CD drive, but mounting /dev/sr0 only shows a placeholder message intended for Windows users.

Thank you for purchasing your Lytro camera. Unfortunately, we do not have a

Windows version of our desktop application at this time. Please check out

http://support.lytro.com for the latest info on Windows support.

It was pretty trivial to write the lfpsplitter to get the raw data shown above, but doing anything useful with it will take more effort. Normally simple stuff like demosiacing the Bayer array will likely be complicated by the need to avoid the gaps between microlenses and not distort the ray direction information. Getting high quality results will probably also require applying the calibration information from the camera backups. A first party light field editing library would be wonderful, but Lytro probably has other priorities.

You can grab my lfpsplitter tool from GitHub at git://github.com/nrpatel/lfptools.git and I uploaded an example .lfp you can use with it if you want to play with light field captures without the $400 hardware commitment.