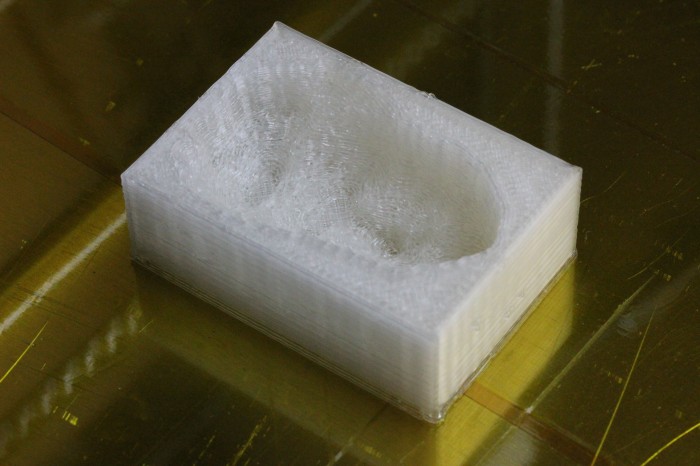

Since my seemingly fragile 3D printer had never left my desk before and, even in prime condition, could only print an object every 10 minutes or so, I decided that I needed a backup project for the Bay Area Maker Faire last month. I conscripted Will to help me out on a purely software, Kinect-based project. After downscoping our ideas several times as the Faire weekend approached, we eventually settled on generating Minecraft player skins of visitors. The printer ended up working fine (and more reliably than the software-only project), but the Minecraft “Maker Ant Farm” was more of a crowd pleaser.

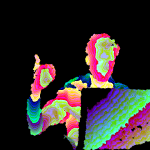

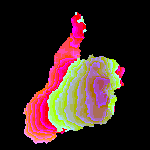

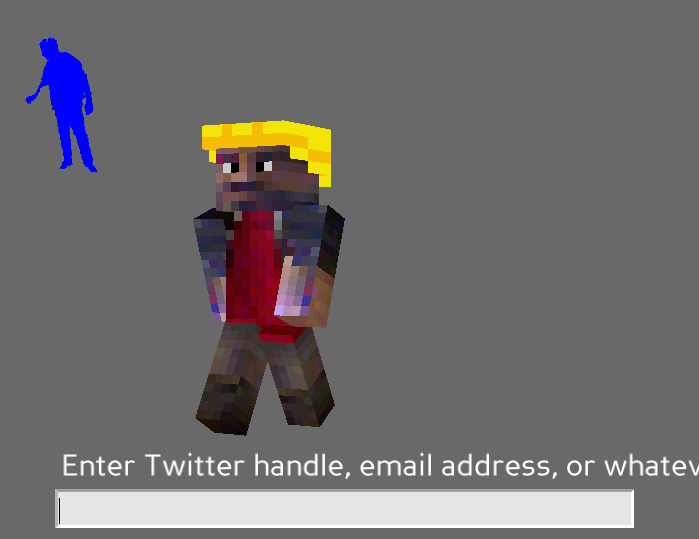

A visitor would stand in front of the Kinect and enter the field goal/psi calibration pose. We used OpenNI and NITE to find their pose and segment them out of the background for a preview display. Using OpenCV, we mapped body parts to the corresponding sections of the Minecraft skin texture. Since we could only see the front and parts of the sides of a person, we just made up what the back would look like based on the front. This was of course imprecise and resulted in heads that often looked like they had massive bald spots. Rather than trying to write some kind of intelligent texture fill algorithm on a short schedule, we just gave all of the skins yellow hard hats (not blonde hair, contrary to popular opinion). After generating the skin, we loaded it back onto ShnitzelKiller’s player rig in Panda3D. I had planned on writing full skeletal tracking for the rig, but ran out of time and settled on just having it follow the position and rotation of the user and perform an animated walk. After walking around a bit watching a low-res version of himself or herself, the user could enter a Twitter handle or email address to keep the skin. The blocky doppelgänger was then dropped onto a Minecraft server instance we had running, as a bot that did simple things like walk around in circles or drown.
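For the curious, here is a rough sketch of the "make up the back from the front, then hide the damage under a hard hat" idea. This is not the code from the repo. It is a minimal pure-Python illustration that assumes the classic 64x32 skin layout, and the region coordinates, the function names, the two-row hat depth, and the exact shade of yellow are all my own placeholders.

```python
# Hypothetical sketch, not the project's actual code.
# Assumes the classic 64x32 Minecraft skin layout.
SKIN_W, SKIN_H = 64, 32

# Head faces as (x, y, width, height) in texture pixels (assumed layout).
HEAD_TOP = (8, 0, 8, 8)
HEAD_RIGHT = (0, 8, 8, 8)
HEAD_FRONT = (8, 8, 8, 8)
HEAD_LEFT = (16, 8, 8, 8)
HEAD_BACK = (24, 8, 8, 8)

TRANSPARENT = (0, 0, 0, 0)
HARD_HAT_YELLOW = (255, 216, 0, 255)  # arbitrary yellow, chosen for illustration


def new_skin(fill=TRANSPARENT):
    """Create a blank skin as a list of rows of RGBA tuples."""
    return [[fill for _ in range(SKIN_W)] for _ in range(SKIN_H)]


def copy_mirrored(skin, src, dst):
    """Copy the src region onto dst, flipped left to right.

    The flip means a feature on the wearer's left stays on the
    wearer's left when the face is reused for the back.
    """
    sx, sy, w, h = src
    dx, dy, dw, dh = dst
    if (w, h) != (dw, dh):
        raise ValueError("source and destination regions must match in size")
    # Snapshot the source first so overlapping regions would still be safe.
    block = [[skin[sy + r][sx + c] for c in range(w)] for r in range(h)]
    for r in range(h):
        for c in range(w):
            skin[dy + r][dx + c] = block[r][w - 1 - c]


def fill_rect(skin, rect, color):
    """Paint a solid rectangle."""
    x, y, w, h = rect
    for r in range(y, y + h):
        for c in range(x, x + w):
            skin[r][c] = color


def add_hard_hat(skin, hat_rows=2, color=HARD_HAT_YELLOW):
    """Cover the top of the head and the top rows of each side face."""
    fill_rect(skin, HEAD_TOP, color)
    for x, y, w, _h in (HEAD_RIGHT, HEAD_FRONT, HEAD_LEFT, HEAD_BACK):
        fill_rect(skin, (x, y, w, hat_rows), color)


def fake_back_of_head(skin):
    """Invent the unseen back of the head, then hide the seams with a hat."""
    copy_mirrored(skin, HEAD_FRONT, HEAD_BACK)
    add_hard_hat(skin)
    return skin
```

Calling `fake_back_of_head(skin)` on a skin whose front head face has already been filled in from the camera image would produce a back face plus the hat. The same `copy_mirrored` helper could be pointed at the torso and limb regions, given their coordinates.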

Despite some crashiness in NITE and the extremely short timeframe we wrote the project in, it ended up working reasonably well. Thanks to the low-resolution style and implied insistence on imagination in Minecraft, the players avoid looking like the ghastly zombies in Kinect Me. You can see examples of some of the generated skins on @MakerAntFarm. I hate not releasing code, but I almost hate releasing this code more. It is very likely to be the worst I have ever hacked together, and I can’t help but suspect it will be held against me at some point. Nonetheless, for the greater good, it’s up on GitHub. There are vague instructions on how one might use it in the README. Good luck, and I’m sorry.