This project is a tangent off of something cool I’ve been hacking on in small pieces over the last few months. I probably would not have gone down this tangent had it not been for the recent publication of Fabricate Yourself. Nothing ~~irks~~ inspires me more than when someone does something cool and then releases only a description and pictures of it. Thus, I’ve written FaceCube, my own open source take on automatically creating solid models of real-life objects using the libfreenect Python wrapper, pygame, NumPy, MeshLab, and OpenSCAD.

The process is currently multi-step, but I hope to get it down to one button press in the future. First, run facecube.py, which brings up a psychedelic preview image showing the closest 10 cm of stuff to the Kinect. Use the up and down arrow keys to adjust that distance threshold. Pressing the spacebar pauses and resumes capture, which makes it easier to pick objects. Click on an object in the preview to segment it out; everything else will disappear, and clicking elsewhere clears the selection. You can still use the arrow keys while capture is paused and segmented to adjust the depth of what you want to capture. The H and G keys adjust hole filling, which smooths out noise and fills small holes in the object. If the object is supposed to have holes in it, press D to enable donut mode, which leaves the holes open. Once you are satisfied, press P to take a screenshot or S to save the object as a PLY point cloud.
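The depth cut and click-to-segment steps above can be sketched in NumPy roughly as follows. This is not the code in facecube.py; it assumes the depth frame has already been converted to millimetres with 0 marking unmeasured pixels (libfreenect's default depth format is raw 11-bit values, not millimetres), and `near_mask` and `segment_at` are hypothetical names. Hole filling and donut mode are left out.

```python
from collections import deque

import numpy as np


def near_mask(depth_mm, band_mm=100, invalid=0):
    """Keep pixels within band_mm of the closest valid pixel.

    Assumes depth_mm is a 2-D array in millimetres (hypothetical
    preprocessing) and that `invalid` marks pixels with no reading.
    """
    valid = depth_mm != invalid
    if not valid.any():
        return np.zeros(depth_mm.shape, dtype=bool)
    nearest = depth_mm[valid].min()
    return valid & (depth_mm <= nearest + band_mm)


def segment_at(mask, row, col):
    """Return the 4-connected region of `mask` containing the clicked pixel."""
    region = np.zeros_like(mask, dtype=bool)
    if not mask[row, col]:
        return region  # clicked on background: empty selection
    h, w = mask.shape
    queue = deque([(row, col)])
    region[row, col] = True
    while queue:  # breadth-first flood fill
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and mask[nr, nc] and not region[nr, nc]:
                region[nr, nc] = True
                queue.append((nr, nc))
    return region
```

A plain breadth-first flood fill keeps the sketch dependency-free beyond NumPy; if you already depend on SciPy, `scipy.ndimage.label` finds every connected region in one call and you can pick the label under the click.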

You can then open the PLY file in MeshLab to turn it into a solid STL. I followed a guide to figure out how to do that and created the filter script attached below. To use it, click Filters -> Show current filter script, click Open Script, choose meshing.mlx, and click Apply Script. You may have to click in the preview, but after a few seconds it will report that it successfully created a mesh. Click Render -> Render Mode -> Flat Lines to see what it looks like, then click File -> Save As and save it as an STL. You can probably get better results by manually picking the right filters for your object, but this script will be enough most of the time.
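A PLY point cloud like the one MeshLab opens here is simple enough to write by hand. The sketch below back-projects the selected pixels with a pinhole camera model and writes an ASCII PLY file. `region_to_points` and `to_ascii_ply` are hypothetical helpers, the camera intrinsics `fx`, `fy`, `cx`, `cy` are assumptions you would need to get from a calibration (this post gives none), and facecube.py may do this differently.

```python
import numpy as np


def region_to_points(depth_mm, region, fx, fy, cx, cy):
    """Back-project selected depth pixels to 3-D points (pinhole model).

    fx, fy, cx, cy are assumed camera intrinsics in pixels; the output
    is an (N, 3) array in the same units as depth_mm.
    """
    rows, cols = np.nonzero(region)
    z = depth_mm[rows, cols].astype(float)
    x = (cols - cx) * z / fx
    y = (rows - cy) * z / fy
    return np.column_stack((x, y, z))


def to_ascii_ply(points):
    """Serialize an (N, 3) array of points as an ASCII PLY point cloud."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    body = [f"{x:.3f} {y:.3f} {z:.3f}" for x, y, z in points]
    return "\n".join(header + body) + "\n"
```

Writing the result with `open("face.ply", "w").write(to_ascii_ply(points))` gives a file MeshLab should read as a vertex-only mesh; whether surface reconstruction then needs normals computed first depends on the filters in your script.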

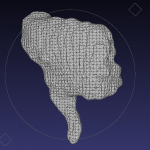

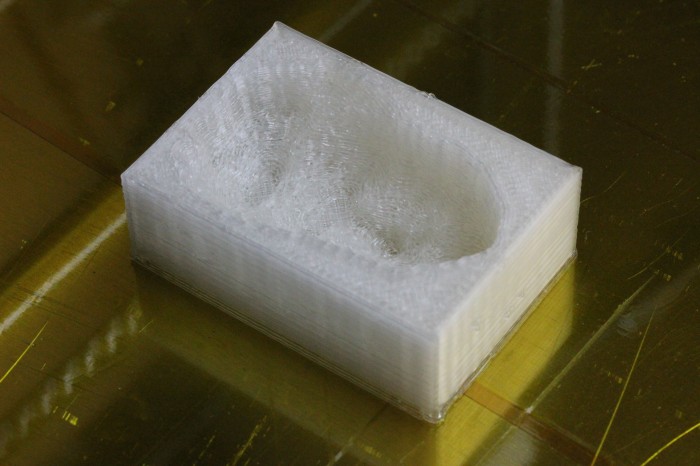

You can then open the STL in OpenSCAD or Blender and scale and modify it to your heart’s (or printer’s) content. Of course, the real magic happens when you take advantage of all that OpenSCAD has to offer. Make a copy of yourself frozen in carbonite, put your face on a gear, or make paperweights shaped like your foot. This is also where the name FaceCube comes from. My original goal going into this, I think at my roommate’s suggestion, was to create ice cube trays in the shapes of people’s faces. This is very easy in OpenSCAD: just subtract the face object from a cube.

```openscad
// Subtract the face (rotated, then translated, then scaled) from the block
difference() {
    cube([33, 47, 17]);
    scale([0.15, 0.15, 0.15])
        translate([85, 140, 120])
            rotate([180, 0, 0])
                import("face.stl");
}
```
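The scale and translate values in that snippet depend on the size and position of a particular scan. Because OpenSCAD applies the transforms innermost first, a point p of the rotated face ends up at s·(p + t). The hypothetical helper below uses that to pick s and t, assuming you already know the face's bounding box after the 180° rotation (for example from MeshLab): it centres the face in x/y with a wall of ice around it and puts the deepest point a fixed floor thickness above the bottom of the block.

```python
def fit_face_in_cube(lo, hi, cube, wall=2.0, floor=2.0):
    """Choose s and t for OpenSCAD's `scale(s) translate(t) <face>`.

    lo, hi: (x, y, z) bounding-box corners of the already-rotated face,
    in the STL's own units. cube: (x, y, z) size of the block.
    """
    span_x = hi[0] - lo[0]
    span_y = hi[1] - lo[1]
    # Largest uniform scale that leaves `wall` on every side in x and y
    s = min((cube[0] - 2 * wall) / span_x, (cube[1] - 2 * wall) / span_y)
    # Final position is s * (p + t), so solve for t
    tx = cube[0] / (2 * s) - (lo[0] + hi[0]) / 2  # centre in x
    ty = cube[1] / (2 * s) - (lo[1] + hi[1]) / 2  # centre in y
    tz = floor / s - lo[2]  # lowest point lands at z = floor
    return s, (tx, ty, tz)
```

Anything above the top of the block is simply cut away by `difference()`, so the helper only has to place the deepest point.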

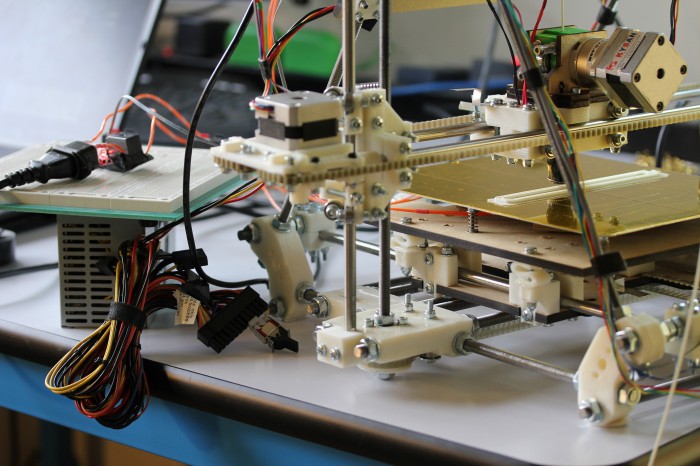

Since all of the cool kids are apparently doing it, I’ve put this stuff into a GitHub repository. Go ahead and check it out, err… git clone it out. The facecube.py script requires libfreenect from the unstable branch and any recent version of pygame, NumPy, and SciPy. After that, you’ll need any recent version of MeshLab or Blender to do the meshing. I’ve been using this on Ubuntu 10.10, but it should work without much trouble on Windows or OS X. The latest code will be on git, but if you are averse to it for whatever reason, I’ve attached the script and the MeshLab filter script below. Since Thingiverse is the place for this sort of thing, I’ve also posted it along with some sample objects as thing:6839.

Download:

```shell
git clone git@github.com:nrpatel/FaceCube.git
```

facecube.py

meshing.mlx
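Since the goal is a single button press, the MeshLab step can in principle be scripted as well. Older MeshLab releases ship a `meshlabserver` command-line tool that applies a filter script with `-i` (input), `-o` (output), and `-s` (script); newer releases dropped it in favour of PyMeshLab, so treat this as a sketch for MeshLab versions that still include it. The wrapper name is hypothetical.

```python
import shutil
import subprocess


def mesh_with_meshlabserver(ply_path, stl_path, script_path="meshing.mlx", run=True):
    """Build, and optionally run, a meshlabserver call that meshes a PLY into an STL.

    Assumes an older MeshLab install with meshlabserver on the PATH.
    """
    cmd = ["meshlabserver", "-i", ply_path, "-o", stl_path, "-s", script_path]
    if run:
        if shutil.which("meshlabserver") is None:
            raise FileNotFoundError("meshlabserver not found on PATH")
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return cmd
```

Calling `mesh_with_meshlabserver("face.ply", "face.stl")` right after saving the point cloud would replace the Filters -> Show current filter script clicks with one command.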